Inside NASA’s archives: Meet the team restoring astronomical history (Image Credit: Space.com)

Precious data from space missions, going back decades, is being carefully restored and archived by scientists at the NASA Space Science Data Coordinated Archive, allowing researchers today to make new discoveries by delving into the history books.

“What’s surprising is how much of this information is either lost or at least not in a condition that anybody can use it in,” planetary scientist David Williams of the NASA Space Science Data Coordinated Archive (NSSDCA) told Space.com. “We’ve got tons of photography, reels of film from various missions, a lot of microfilm and microfiche. We’re slowly working through it.”

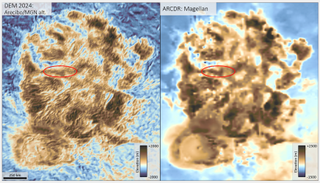

The detective work required to hunt through archives, basements and forgotten store-rooms at institutions all across the United States to find and restore this old data couldn’t be more important; the unearthed data can still be used by researchers today to help guide missions of the future. For instance, take the team working on NASA’s DAVINCI (Deep Atmosphere Venus Investigation of Noble gases, Chemistry, and Imaging) mission, which will begin its trip to Venus in the early 2030s. It will be the first dedicated, American-led Venusian mission since the 1990s (Europe and Japan have both been back to Venus since then). One of its targets on the carbon-dioxide enshrouded planet is a continent-sized plateau called Alpha Regio, which is a gigantic tessera of deformed surface features linked to volcanism and possibly impacts.

So, to know what DAVINCI should look for on Alpha Regio, the mission’s team of scientists has gone back to the past, applying modern-day analysis and machine-learning techniques to data from NASA’s Magellan Venus mission from the early 1990s, coupled with some archival Arecibo radar data. The goal is to build a new map of Alpha Regio and identify puzzling geological structures on the tessera that may have gone unnoticed. In a similar use of old data, earlier this year, researchers found evidence for volcanic activity in Magellan data from 1990 to 1992.

Meet the archivists

None of this old data would be available and in a usable condition if it were not for the hard work of the team at the NASA Space Science Data Coordinated Archive (NSSDCA) at the agency’s Goddard Space Flight Center. The NSSDCA’s job is to restore and digitize data from all interplanetary space missions. Together, the crew hunts for lost data from some of NASA’s earliest missions, including the Apollo missions to the moon. (Other institutions are responsible for the data from other types of missions; for instance the Space Telescope Science Institute, which manages the Hubble and James Webb space telescopes, is also tasked with archiving the data from the observatories.)

As the head of the NSSDCA, David Williams probably has one of the best jobs at NASA. His role isn’t just to be an archivist; it’s also to play detective, figuring out where missing data might be lurking, then working out what that data is telling us and how it should be formatted so that it can be useful to future generations of astronomers.

“I love that aspect of it,” he enthused in an interview with Space.com. “Trying to dig up the data and figure something out is when I have the most fun here.”

Prior to about the mid- to late-1980s, there were no rules on how to archive precious astronomical data collected by space missions. In fact, some researchers didn’t even bother to archive their data at all. By the late 1980s, the authorities at NASA’s Planetary Data System (PDS), which is the one-stop shop for planetary science data, flexed their muscles and began insisting on an archival process, even to the point of denying funding to researchers who didn’t archive their data. The job of making sure things are archived properly falls to Williams and the NSSDCA.

“Now you know that if there’s been a mission since Magellan [from 1989] or thereabouts, the data are going to be well documented and complete with very few exceptions,” he said. However, for missions before then, the availability and quality of the data can be a crapshoot.

“Back even to the mid-80s really, there were no systematic rules about archiving data,” said Williams. “This is something that I learned big time when I started doing this.”

Now, when applying for funding, researchers must not only submit all their raw data, but also the documentation that explains what the data is measuring and how it should be displayed. Researchers must undergo a “data review,” where Williams and his colleagues scrutinize the data and documentation and make sure they have everything they need — anything that isn’t sufficiently laid out gets sent back to the researchers to be fixed.

Still missing data

There’s still lots of data from experiments and missions from before this rigorous validation scheme was introduced that just hasn’t been archived, has incomplete documentation, or is even simply missing, perhaps permanently.

“The documentation is just as important as the data. We used to get boxes of tapes with a cover letter, ‘here’s all the data from such-and-such mission,’ and we’d wonder: ‘What are we supposed to do with this?'” said Williams. “For the really old stuff, there’s not even anyone to talk to about it, so you have to find out yourself how the experiment worked.”

Today everything is digitized and backed up, but the original source, be it a print-out, microfilm or nine-track tape, is retained, contained in an “archive information packet” that is basically just a wrapper with the data’s ID. Because boxes of print-outs take up lots of room, many of them were transferred in the old days to microfilm and microfiche (transparencies containing scaled-down images of printed items). Now a lot of the NSSDCA staff’s time is spent digitizing these microfilms, and in the process they have discovered alarming gaps and vulnerabilities in the archive.

“About 15 years ago, we got a request from someone for the data from the Viking biology experiment,” said Williams. This was an experiment on the two Viking landers from 1976 that was designed to test samples of Martian dirt for the presence of microbial life. Williams believed that all the biology experiment data was on microfilm, but when he sat down in the archive to sift through the documentation pertaining to the experiment to try and find the requested data, he couldn’t find it. Perhaps it had been discarded, or gone bad, mused Williams.

“And I realized that I’m sitting there with these boxes of microfilm, and they are the only thing that’s left from that Viking biology experiment,” he said. “If something happened to these boxes of microfilm they would be gone. So I said let’s just get this digitized right now and give copies to everyone we know and make sure it can’t get lost. It was a scary thought, and I do believe that from the older missions there is data that has been lost and we’re never going to find it.”

The weird story of Apollo’s ALSEP stations

Sometimes, the story behind lost data is more bizarre than it just getting chucked out in the trash.

Take the case of the ALSEP stations. Short for Apollo Lunar Surface Experiments Package, these were science stations left on the moon by every Apollo mission that landed after Apollo 11 (Apollo 11 deployed a simpler package, but it was still basically the same thing). The ALSEP stations recorded things such as temperature, moonquakes, cosmic-ray exposure, heat flow in the sub-surface, the moon’s gravitational and magnetic fields, and more. The ALSEP stations took these readings continuously, beaming them back to Earth until the stations were shut down in 1977.

Their data had been stored on magnetic tape at the University of Texas at Galveston — and then the Marine Mammal Protection Act happened.

What does that have to do with astronomical data? “This is what makes it so weird!” said Williams. Previously, magnetic tapes had used whale oil as a lubricant to prevent them from drying out or getting stuck in the tape players.

“It turned out that whale oil was the perfect lubricant for computer tapes, because it was non-conductive, it didn’t harm the magnetic substrate, it didn’t have magnetic properties and didn’t mess up the tape-reading machines,” said Williams.

With the passing of the Marine Mammal Protection Act (quite rightly), whale oil could no longer be used. That was okay; a company had anticipated this and devised a new lubricant to replace the whale oil. But then, six months later, it was discovered that the new lubricant was drying out the magnetic tapes and causing them to rip in the tape players.

That left NASA in a bind. Data was coming down from satellites and interplanetary missions all the time, and they needed tapes to record that data. There was no time to wait for someone to come up with a new lubricant, as they needed somewhere to store all this new incoming data.

“So, they started pillaging old tapes that still had whale oil on them and writing over them,” said Williams. “And at some point someone found the ALSEP tapes and wrote over them, so now they’re gone.”

All that survived were a bunch of tapes that contained about two weeks’ worth of data from the ALSEP stations that some researchers must have borrowed from the archive before the pillaging began.

“All the other ones were gone,” said Williams. “And all because of whale oil!”

The Iron Mountain

Thankfully, today we do not need to rely on whale oil, or magnetic tapes. All new data is digitized, and old data is in the process of being digitized. It’s all now in the cloud, of course, but hard copies still exist in two locations: one at the NSSDCA, and the other at a location known as “Iron Mountain.”

Iron Mountain is actually the name of a company that owns “a big archive that everybody uses and they are about 20 or 30 miles away [from NASA Goddard in Maryland, near Washington D.C.],” said Williams. Their name brings to mind a huge, impenetrable mountain inside which, stacked from floor to ceiling, are servers, boxes and stacks of magnetic tapes.

That’s not actually too far from the truth or, at least, from what the truth used to be.

“Originally they did have a ‘mountain’ in Pennsylvania, a giant mine that they used to store stuff so that it could be completely protected from anything that ever happened,” said Williams. “And that’s why it’s called Iron Mountain.”

Short of nuclear war, the data should be safe. Even if, as Williams joked, a tornado wiped out NSSDCA, the backed-up data at Iron Mountain would be secure. If something so large happened that it took out both NSSDCA and Iron Mountain, then we’d probably have bigger things to worry about than losing some astronomical data, laughed Williams.

Moving with the times

A bigger threat than natural disasters these days is the dreaded software or media update. We’ve all seen them: I’d bet you’ve updated a program to the latest version at some point, only to watch it fail to open your oldest files. Or consider the stacks of VHS tapes now consigned to landfill simply because the way that we consume media has moved on.

An essential part of archiving data, then, is making it future-proof so that we can still open and read it 50 or 100 years down the line.

“We try and keep up with the media, because what happens is that the media lasts longer than the actual machines to read the media,” said Williams. “We have all the nine-track tapes but no nine-track tape readers that work anymore.”

More broadly, “there is a natural competition between making the data available in modern format, and making something that someone in the future is going to be able to open, and not say, ‘oh, I don’t know what a Google spreadsheet is,'” he said.

Because the software changes all the time, the NSSDCA team tries to use the simplest thing, such as an ASCII table. ASCII stands for American Standard Code for Information Interchange. It represents each character as a number, carries no formatting, is widespread in computing and on the Internet, and can be read by virtually any software, as opposed to an Excel spreadsheet, for example, “which might not even exist in the future,” said Williams.
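
To make the idea concrete, here is a minimal Python sketch of what a plain ASCII table looks like in practice. It is an illustration only, not the NSSDCA's actual format or tooling: the column names, the sample values and the helper functions `to_ascii_table` and `parse_ascii_table` are all invented for this example.

```python
# Hypothetical readings, invented purely for illustration (not real mission data).
rows = [("A001", 1, -85.2), ("A002", 2, -83.9)]


def to_ascii_table(rows):
    """Format rows as a fixed-width, plain-text table with a header line."""
    lines = [f"{'SAMPLE':<6} {'RUN':>3} {'VALUE':>7}"]
    for sample, run, value in rows:
        lines.append(f"{sample:<6} {run:>3d} {value:>7.1f}")
    return "\n".join(lines) + "\n"


def parse_ascii_table(text):
    """Read the table back using nothing more than whitespace splitting."""
    body = text.splitlines()[1:]  # skip the header line
    return [(s, int(r), float(v)) for s, r, v in (line.split() for line in body)]


table = to_ascii_table(rows)

# Encoding as ASCII raises UnicodeEncodeError if a non-ASCII character slips in.
encoded = table.encode("ascii")

# Each character is stored as a single small number.
print(list("NASA".encode("ascii")))  # [78, 65, 83, 65]
print(table)
```

The point of the sketch is that reading the file back needs no special software: any language that can split a line on whitespace can recover the values, which is what makes such a table a reasonable bet for readers decades from now.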

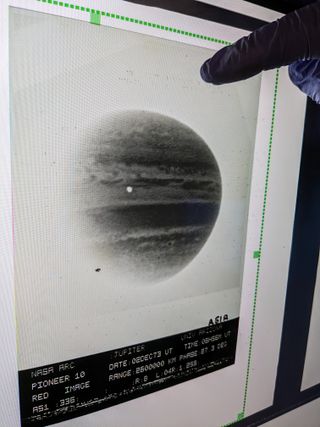

Currently, Williams and his team are sorting through, restoring and digitizing old data from the NASA Pioneer Venus mission, which operated between 1978 and 1992, in anticipation of the forthcoming DAVINCI mission, VERITAS (Venus Emissivity, Radio Science, InSAR, Topography, and Spectroscopy) endeavor and Europe’s EnVision mission to Venus, all hopefully launching in the late 2020s and early 2030s.

“We think a lot of that data might be useful,” said Williams. Indeed, as we saw at the top of this article, the DAVINCI team is already putting archival Venus data to use.

Researchers are looking back at old data all the time, applying new processing and analysis techniques to it to tease out new information. Who knows what discoveries still await us in measurements that were made decades ago?

As the keepers of these secrets, David Williams and his team deserve the thanks for making this data available for posterity.